Getting Started

The following guide will help you to get started using Connect. Once you have your project ID and API key and you have decided how to model your events, you can start pushing events and executing queries.

Authentication

To push events or execute queries, you must supply the following headers with the request for authentication:

- X-Project-Id - the ID of the project to push to or query.

- X-API-Key - the push or query API key for the project.

Read more about projects and API keys in the Projects and keys section.

Pushing events

Pushing events via HTTP is simple. Once you have your project ID and push API key, POST the JSON event to your collection endpoint. The event will be available for querying shortly afterwards (see Reliability of events).

Single event

POST https://api.getconnect.io/events/:collection

| Parameter | Description |
|---|---|
| collection | collection to which to push the event |

To push a single event, POST it to your collection endpoint.

For example, to push an event to test-collection:

curl -X POST \
  -H "X-Project-Id: YOUR_PROJECT_ID" \
  -H "X-API-Key: YOUR_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{ "customer": { "firstName": "Tom", "lastName": "Smith" }, "product": "12 red roses", "purchasePrice": 34.95 }' \
  https://api.getconnect.io/events/test-collection
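If you are pushing from Python rather than the command line, you can build the same request with the standard library. This is a minimal sketch, not an official Connect client. `build_push_request` is a hypothetical helper that only builds the request; passing the result to `urllib.request.urlopen` would actually send it.

```python
import json
import urllib.parse
import urllib.request

API_BASE = "https://api.getconnect.io"

def build_push_request(project_id: str, api_key: str, collection: str,
                       event: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request that pushes one event."""
    # Percent-encode the collection so spaces or slashes cannot change the path.
    url = f"{API_BASE}/events/{urllib.parse.quote(collection, safe='')}"
    return urllib.request.Request(
        url,
        data=json.dumps(event).encode("utf-8"),
        method="POST",
        headers={
            "X-Project-Id": project_id,
            "X-API-Key": api_key,
            "Content-Type": "application/json",
        },
    )

if __name__ == "__main__":
    request = build_push_request(
        "YOUR_PROJECT_ID", "YOUR_API_KEY", "test-collection",
        {"customer": {"firstName": "Tom", "lastName": "Smith"},
         "product": "12 red roses", "purchasePrice": 34.95},
    )
    print(request.get_method(), request.full_url)
    # To actually send it: urllib.request.urlopen(request)
```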

Responses

200 OK

The event has been successfully recorded.

422 Unprocessable Entity

One or more errors have occurred with the event data sent.

{
  "errors": [
    { "field": "fieldName", "description": "There was an error with this field." }
  ]
}

413 Request Too Large

The event was too large. Single events cannot be larger than 64 KB.

{
  "errorMessage": "Maximum event size of 64kb exceeded."
}

400 Bad Request

The event data supplied is not valid.

{
  "errorMessage": "Property names starting with tp_ are reserved and cannot be set."
}

500 Internal Server Error

A server error occurred with the Connect API.

{
  "errorMessage": "An error occurred while processing your request"
}
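The 413 and 400 responses above are caused by the event itself, so you may want to catch them before sending. The sketch below is a hypothetical client-side pre-check, not part of Connect. It assumes "64kb" means 64 × 1024 bytes of UTF-8 encoded JSON. It also checks nested objects for tp_ property names, which may be stricter than the API's own validation.

```python
import json

MAX_EVENT_BYTES = 64 * 1024  # assumption: "64kb" taken as 64 KiB of UTF-8 JSON
RESERVED_PREFIX = "tp_"

def find_reserved_keys(value, path=""):
    """Yield dotted paths of property names that start with the reserved prefix."""
    if isinstance(value, dict):
        for key, child in value.items():
            child_path = f"{path}.{key}" if path else key
            if key.startswith(RESERVED_PREFIX):
                yield child_path
            yield from find_reserved_keys(child, child_path)
    elif isinstance(value, list):
        for item in value:
            yield from find_reserved_keys(item, path)

def validate_event(event: dict) -> list:
    """Return problems likely to cause a 413 or 400 response; empty if none found."""
    problems = []
    size = len(json.dumps(event).encode("utf-8"))
    if size > MAX_EVENT_BYTES:
        problems.append(f"event is {size} bytes; limit is {MAX_EVENT_BYTES}")
    for key_path in find_reserved_keys(event):
        problems.append(f"reserved property name: {key_path}")
    return problems
```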

Batches of events

Instead of pushing events one by one, you can push them in batches. This is useful when you have many events to send at once, or when you want to push to multiple collections in a single request.

For large batches (over 5,000 events), you must upload the events to S3 and import them from there. See Bulk importing events for more information.

To push a batch, POST a JSON object to https://api.getconnect.io/events. Its keys are collection names and its values are arrays of the events to push to each collection. For example:

curl -X POST \
  -H "X-Project-Id: YOUR_PROJECT_ID" \
  -H "X-API-Key: YOUR_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "purchases": [
      {
        "customer": { "firstName": "Tom", "lastName": "Smith" },
        "id": "1849506679",
        "product": "12 red roses",
        "purchasePrice": 34.95
      },
      {
        "customer": { "firstName": "Jane", "lastName": "Doe" },
        "id": "123456",
        "product": "1 daisy",
        "purchasePrice": 8.95
      }
    ],
    "refunds": [
      {
        "customer": { "firstName": "Tom", "lastName": "Smith" },
        "id": "REF-1234",
        "product": "12 red roses",
        "purchasePrice": -34.95
      }
    ]
  }' \
  https://api.getconnect.io/events


The above example pushes events to two separate collections (purchases and refunds) in a single request.

The restrictions on events in the batches are the same as those for pushing a single event.

A batch request always returns a successful response unless the overall batch document is malformed or the batch exceeds the limit of 5,000 events. The response contains an array of results for each collection, with one result per pushed event, in the same order as the events were sent.

Each result object contains a success boolean and a duplicate boolean, which indicates whether the event was a duplicate based on its id property. It also contains a message describing the reason for failure, if applicable.
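If you have more than 5,000 events but would rather not use a bulk import, one option is to split them across several batch requests. This sketch rests on two assumptions. First, it assumes the 5,000-event limit counts events across all collections in a request. Second, `chunk_batches` is a hypothetical helper, and it may spread a single collection over several batches.

```python
def chunk_batches(events_by_collection: dict, max_events: int = 5000):
    """Split {collection: [events]} into batch bodies of at most max_events events.

    Event order within each collection is preserved, so each batch response can
    still be matched to its events by position.
    """
    batch, count = {}, 0
    for collection, events in events_by_collection.items():
        for event in events:
            if count == max_events:
                yield batch  # this batch is full; start a new one
                batch, count = {}, 0
            batch.setdefault(collection, []).append(event)
            count += 1
    if batch:
        yield batch
```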

Batch responses

200 OK

An example response for the above request could be:

{
  "purchases": [
    { "success": true },
    {
      "success": false,
      "message": "An error occurred inserting the event please try again."
    }
  ],
  "refunds": [
    { "success": true }
  ]
}

500 Internal Server Error

A server error occurred with the Connect API.

{
  "errorMessage": "An error occurred while processing your request"
}
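Each collection's results come back in the same order as its events were pushed. You can therefore pair results with events by position to find the ones that need retrying. Below is a minimal sketch with a hypothetical `failed_events` helper. It treats only `success: false` as a failure and leaves handling of duplicate results to you.

```python
def failed_events(batch: dict, response: dict) -> list:
    """Return (collection, event, message) for every event marked as failed.

    Relies on each collection's results being in the same order as its events.
    """
    failures = []
    for collection, events in batch.items():
        results = response.get(collection, [])
        for event, result in zip(events, results):
            if not result.get("success", False):
                failures.append((collection, event, result.get("message")))
    return failures
```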

Bulk importing events

POST https://api.getconnect.io/event-import

| Parameter | Description |
|---|---|
| collection | collection to which to import the events |
| s3Path | path to the S3 file containing the events to import |
| s3AccessKeyId | key to access the S3 file |
| s3SecretAccessKey | secret key to access the S3 file |

To import a large number of events (usually historical), you must use the event import API and provide a file accessible via S3.

If you are importing 5,000 events or fewer, you can use event batches to push the events in the request body. You're still welcome to use event imports if you prefer, though!

The import requires the name of the collection into which to import the events and the S3 details needed to retrieve the file.

Only JSON formatted files are supported and the format must be a single event per line (not a JSON array). For example, the following is a valid file to import:

{ "product": "12 red roses", "quantity": 5, "price": 25.00 }
{ "product": "White bouquet", "quantity": 2, "price": 48.40 }

The entire import will be rejected if the file does not follow this format.
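One way to produce a file in this one-event-per-line format is sketched below, using a hypothetical helper. Uploading the result to S3 is left to your usual S3 tooling. `json.dumps` escapes newlines inside string values, so each event is guaranteed to stay on a single line.

```python
import json

def to_import_file(events) -> str:
    """Serialize events as one JSON object per line (not a JSON array)."""
    lines = []
    for event in events:
        if not isinstance(event, dict):
            raise TypeError("each event must be a JSON object")
        # Compact separators; embedded newlines are escaped as \n by json.dumps.
        lines.append(json.dumps(event, separators=(",", ":")))
    return "".join(line + "\n" for line in lines)
```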

For example:

curl -X POST \
  -H "X-Project-Id: YOUR_PROJECT_ID" \
  -H "X-API-Key: YOUR_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "collection": "purchases",
    "s3Path": "s3://mybucket/purchases.json",
    "s3AccessKeyId": "YOUR_ACCESS_KEY_ID",
    "s3SecretAccessKey": "YOUR_SECRET_ACCESS_KEY"
  }' \
  https://api.getconnect.io/event-import


A successful import request returns a 201 Created response. Its Location header contains the URI where you can monitor the status of the import.

Monitoring the import

GET https://api.getconnect.io/event-import/:id

| Parameter | Description |
|---|---|
| id | ID of the event import (returned from the import endpoint on creation in the Location header) |

Once you have started an import of events, you can monitor the import with the URI provided in the Location header of the import API.

This allows you to check the status, progress and speed of the import, as well as any errors that have occurred during the import process.

An example response would be:

{
  "collection": "purchases",
  "status": "running",
  "created": "2015-04-01T00:32:45.000Z",
  "started": "2015-04-01T00:33:03.000Z",
  "finished": null,
  "importedEvents": 5130985,
  "duplicateEvents": 0,
  "percentComplete": 42.43,
  "insertRate": "15096/s",
  "error": null,
  "eventErrors": [
    { "index": 9951, "error": "The JSON specified was not valid." },
    { "index": 45930, "error": "The JSON specified was not valid." }
  ]
}

An import can be in one of the following statuses:

- Pending - the import is yet to start

- Running - the import is currently running

- Complete - the import completed successfully

- Failed - the import failed (the error will contain the reason)

The eventErrors property contains an array of errors. The index property of each error refers to the index (or line number) of the offending event in the import file.
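Monitoring usually means polling the Location URI until the import finishes. The sketch below assumes you supply `fetch_status`, a hypothetical function that performs the authenticated GET and returns the parsed JSON. It compares statuses case-insensitively because the example response uses "running" while the list above is capitalized. The poll interval and timeout are arbitrary placeholders.

```python
import time

TERMINAL_STATUSES = {"complete", "failed"}

def wait_for_import(fetch_status, poll_seconds=5.0, timeout_seconds=3600.0,
                    sleep=time.sleep):
    """Poll until the import reaches a terminal status; return the final document."""
    waited = 0.0
    while True:
        status = fetch_status()
        if str(status.get("status", "")).lower() in TERMINAL_STATUSES:
            return status
        if waited >= timeout_seconds:
            raise TimeoutError("import did not finish in time")
        sleep(poll_seconds)
        waited += poll_seconds

def bad_lines(status: dict) -> list:
    """Return the file indexes reported in eventErrors (empty if none)."""
    return [err["index"] for err in status.get("eventErrors") or []]
```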

Restrictions on pushing

There are a number of restrictions on the properties you can use in your events, as well as limitations on querying, which influence how you should structure your events.

Refer to the restrictions in the Modeling your events section.

Reliability of events

When posting an event to Connect, we guarantee the storage of the event if a successful (200) response is returned. This means you can safely flag the event as recorded.

Note: Events are processed in the background, so there may be a slight delay between a successful push response and the event being included in query results.

To guarantee event delivery, we recommend queuing events for sending to Connect and removing them from the queue only once a successful response has been received.

You can also include a custom id property in the event document to prevent duplicates. This makes pushes idempotent, even if the same event is delivered multiple times. For example:

{
  "customer": {
    "firstName": "Tom",
    "lastName": "Smith"
  },
  "id": "1849506679",
  "product": "12 red roses",
  "purchasePrice": 34.95
}
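The queue-then-confirm pattern and custom ids can be combined, as in the minimal in-memory sketch below. `EventQueue` and the `send` callback are hypothetical. The sketch generates the id with `uuid.uuid4()` before the first attempt so that every retry of an event carries the same id. That id scheme is our assumption, not something Connect requires.

```python
import uuid
from collections import deque

class EventQueue:
    """Keep events queued until Connect confirms storage with HTTP 200.

    `send` is a hypothetical caller-supplied function: (collection, event) -> status code.
    """

    def __init__(self):
        self._pending = deque()

    def enqueue(self, collection: str, event: dict) -> None:
        event = dict(event)  # copy so the caller's dict is not modified
        # Assign the id once, so retries are recognized as duplicates.
        event.setdefault("id", str(uuid.uuid4()))
        self._pending.append((collection, event))

    def flush(self, send) -> int:
        """Send queued events in order, stopping at the first failure; return the count sent."""
        sent = 0
        while self._pending:
            collection, event = self._pending[0]
            # If send raises (e.g. a network error), the event also stays queued.
            if send(collection, event) != 200:
                break
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)
```

A production queue would also need persistence across restarts. Events rejected with a 4xx response (for example 400 or 413) should probably be set aside rather than retried, since resending them will not succeed.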

Timestamps

All events have a single timestamp property, which records when the event occurred. Events cannot have more than one date/time property. If you feel you need more than one date/time property, you probably need to reconsider how you're modeling your events.

Querying

You can only run time interval queries or timeframe filters on the timestamp property. No other date/time property in an event is supported for querying.

By default, if no timestamp property is sent with the event, Connect will use the current date and time as the timestamp of the event.

You can, however, override the timestamp, for example to accommodate historical events or to preserve accurate event times when events are queued.

The timestamp must be supplied as an ISO-8601 formatted date. The date must be in UTC (you cannot specify a timezone offset). For example:

{
  "customer": {
    "firstName": "Tom",
    "lastName": "Smith"
  },
  "timestamp": "2015-02-05T14:55:56.587Z",
  "product": "12 red roses",
  "purchasePrice": 34.95
}
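When overriding the timestamp, it is easiest to convert to UTC yourself before formatting. The sketch below produces strings in the same shape as the example above, with millisecond precision and a Z suffix. Rejecting naive datetimes is our own design choice, made to avoid guessing which timezone they were meant to be in.

```python
from datetime import datetime, timezone

def to_connect_timestamp(dt: datetime) -> str:
    """Format an aware datetime as ISO-8601 UTC with milliseconds and a Z suffix."""
    if dt.tzinfo is None:
        raise ValueError("datetime must be timezone-aware")
    utc = dt.astimezone(timezone.utc)
    # %f gives microseconds; truncate to milliseconds to match the example.
    return utc.strftime("%Y-%m-%dT%H:%M:%S.") + f"{utc.microsecond // 1000:03d}Z"

if __name__ == "__main__":
    print(to_connect_timestamp(datetime.now(timezone.utc)))
```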

Timezones

Timestamps are always recorded in UTC. If you supply a timestamp in a timezone other than UTC, it will be converted to UTC. When you query your events, you can specify a timezone so things like time intervals will be returned in local time.

Querying events

GET https://api.getconnect.io/events/:collection?query=

| Parameter | Description |
|---|---|
| collection | collection to query |
| query | query to run (query string parameter) |

To query events in Connect, you construct a query document and perform a GET request with the URL-encoded JSON in the query parameter of the query string.

For example, to get the sum of the price property in a collection called purchases, you would build the following query:

{
  "select": {
    "totalPrice": { "sum": "price" }
  }
}

To execute the query, you would run:

curl -G \
  -H "X-Project-Id: YOUR_PROJECT_ID" \
  -H "X-API-Key: YOUR_API_KEY" \
  --data-urlencode 'query={"select":{"totalPrice":{"sum":"price"}}}' \
  https://api.getconnect.io/events/purchases
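Building the query string by hand is error-prone, so you may prefer to JSON-encode and then URL-encode the query programmatically. Below is a minimal sketch using Python's standard library. `build_query_url` is a hypothetical helper, and you still have to add the authentication headers to the request as shown above.

```python
import json
import urllib.parse

API_BASE = "https://api.getconnect.io"

def build_query_url(collection: str, query: dict) -> str:
    """Return the GET URL for a query document, JSON-encoded then URL-encoded."""
    # Compact separators keep the URL short.
    query_json = json.dumps(query, separators=(",", ":"))
    # quote_via=quote encodes spaces as %20 rather than '+'.
    encoded = urllib.parse.urlencode({"query": query_json}, quote_via=urllib.parse.quote)
    return f"{API_BASE}/events/{urllib.parse.quote(collection, safe='')}?{encoded}"
```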

Metadata

Query results include metadata about the executed query: any groups used, the time interval (if specified) and the timezone (if specified).

For example, if you grouped by product, with a daily time interval and a timezone of Australia/Brisbane, the metadata would look like:

{
  "groups": ["product"],
  "interval": "daily",
  "timezone": "Australia/Brisbane"
}

Metadata is used by our Visualization SDK to discover the shape of your query so that we can easily display the results. If you're doing your own visualization, you can take advantage of the metadata as hints on how to render the results.

Aggregations

You can perform various aggregations over the events you have pushed. Specify in the query's select which properties you wish to aggregate and which aggregation to use. Each aggregation must also be given an alias (its key in select, such as totalPrice), which is used as the property name in the results.

For example, to query the purchases collection and perform aggregations on the price event property:

{
  "select": {
    "itemsSold": "count",
    "totalPrice": { "sum": "price" },
    "averagePrice": { "avg": "price" },
    "minPrice": { "min": "price" },
    "maxPrice": { "max": "price" }
  }
}

This would return a result like:

{
  "metadata": {
    "groups": [],
    "interval": null,
    "timezone": null
  },
  "results": [{
    "itemsSold": 25,
    "totalPrice": 5493.25,
    "averagePrice": 219.73,
    "minPrice": 5.49,
    "maxPrice": 589.20
  }]
}

The following aggregations are supported:

- count

- sum

- avg

- min

- max

Limitations

- Aggregations only work on numeric properties. If you try to aggregate a string property, you will receive a null result. If you try to aggregate a property with multiple types (e.g. some strings, some numbers), only the numeric values are included in the aggregation; the rest are ignored.
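The sketch below makes the documented behavior concrete by evaluating a select clause over a local list of events, counting only numeric values. It illustrates the rules described above and is not Connect's implementation. In particular, it returns null when no numeric values exist at all (rather than, say, 0 for sum). That choice is an assumption extrapolated from the string-property rule.

```python
import numbers

AGGREGATIONS = {
    "sum": sum,
    "avg": lambda values: sum(values) / len(values),
    "min": min,
    "max": max,
}

def numeric_values(events, prop):
    """Collect numeric values of a property, ignoring strings and other types."""
    values = []
    for event in events:
        value = event.get(prop)
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, numbers.Real) and not isinstance(value, bool):
            values.append(value)
    return values

def aggregate(events, spec):
    """Evaluate one aggregation: "count" or a single-key dict like {"sum": "price"}."""
    if spec == "count":
        return len(events)
    op, prop = next(iter(spec.items()))
    if op not in AGGREGATIONS:
        raise ValueError(f"unsupported aggregation: {op}")
    values = numeric_values(events, prop)
    # Assumption: no numeric values at all yields null, as for string properties.
    return AGGREGATIONS[op](values) if values else None

def run_select(events, select):
    """Evaluate every aliased aggregation in a select clause."""
    return {alias: aggregate(events, spec) for alias, spec in select.items()}
```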

Filters

You can filter the events you wish to include in your queries by specifying one or more filters. For example:

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "filter": {
    "product": "12 red roses"
  }
}

The above will filter the query results to only those events that have a product property equal to "12 red roses".

This is shorthand for the eq operator; the following query is identical to the above:

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "filter": {
    "product": { "eq": "12 red roses" }
  }
}

The following match operators are currently supported:

- eq (equal to)

- neq (not equal to)

- gt (greater than)

- gte (greater than or equal to)

- lt (less than)

- lte (less than or equal to)

- exists (whether or not a property exists)

- startsWith (starts with - string values only)

- endsWith (ends with - string values only)

- contains (contains - string values only)

- in (property is one of a list of values)

Exists filter

The exists filter restricts the query results either to events that have the specified property with a non-null value, or to events that lack it (or have it set to null). Supply a boolean value with the exists operator to choose which: true includes events that have the property, false includes events that do not.

Missing properties vs null values

We treat missing properties and properties with a null value the same for the purpose of the exists filter. While we plan to change this behavior in the future, you should consider setting a default value as opposed to a null value on properties if you wish to make a distinction.

For example, the following will filter for events that have a property called gender:

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "filter": {
    "gender": { "exists": true }
  }
}

Whereas the following will filter for events that do not have a property called gender:

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "filter": {
    "gender": { "exists": false }
  }
}

In filter

The in filter matches events whose property equals any one of a list of values.

For example, the following will filter for events that have a category of either "Bikes", "Books" or "Magazines":

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "filter": {
    "category": { "in": ["Bikes", "Books", "Magazines"] }
  }
}

Note: All values in the list must be of the same type (i.e. string, numeric or boolean). Mixed types are currently not supported.

Combining filter expressions

You can also combine filter expressions to filter multiple values on the same property.

For example, the following will filter for events with a price property greater than 5 but less than 10:

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "filter": {
    "price": { "gt": 5, "lt": 10 }
  }
}

"Or" filters

Currently, "or" filters are not supported.
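The filter rules above can be summarized as a local evaluator, which is useful for testing what a filter should match. This is a sketch of the documented semantics, not Connect's implementation. Two behaviors are assumptions. First, dotted paths such as product.category resolve nested properties, as in the group by examples. Second, an event missing the property never matches any operator other than exists.

```python
MISSING = object()  # sentinel for "property not present"

OPERATORS = {
    "eq": lambda actual, expected: actual == expected,
    "neq": lambda actual, expected: actual != expected,
    "gt": lambda actual, expected: actual > expected,
    "gte": lambda actual, expected: actual >= expected,
    "lt": lambda actual, expected: actual < expected,
    "lte": lambda actual, expected: actual <= expected,
    "startsWith": lambda actual, expected: isinstance(actual, str) and actual.startswith(expected),
    "endsWith": lambda actual, expected: isinstance(actual, str) and actual.endswith(expected),
    "contains": lambda actual, expected: isinstance(actual, str) and expected in actual,
    "in": lambda actual, expected: actual in expected,
}

def get_path(event, path):
    """Resolve a dotted path such as "product.category"; return MISSING if absent."""
    value = event
    for part in path.split("."):
        if not isinstance(value, dict) or part not in value:
            return MISSING
        value = value[part]
    return value

def matches(event, filters):
    """True if the event satisfies every filter; filters are combined with AND."""
    for path, condition in filters.items():
        if not isinstance(condition, dict):
            condition = {"eq": condition}  # a bare value is shorthand for eq
        actual = get_path(event, path)
        present = actual is not MISSING and actual is not None
        for op, expected in condition.items():
            if op == "exists":
                # Missing properties and null values are treated the same.
                if present != expected:
                    return False
            elif not present:
                return False  # assumption: absent/null never matches other operators
            else:
                try:
                    if not OPERATORS[op](actual, expected):
                        return False
                except TypeError:
                    return False  # e.g. comparing a string with a number
    return True
```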

Timeframes

You can restrict query results by specifying the timeframe for the query which will filter for events only within that specific timeframe.

If no timeframe is specified, events will not be filtered by time; the query will match events from all time.

For example, the following query filters for events from this month only:

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "timeframe": "this_month"
}

There are two types of timeframes:

- Relative timeframes - a timeframe relative to the current date and time.

- Absolute timeframes - a timeframe between two specific dates and times.

Relative timeframes

Relative timeframes can be specified as either a string or a complex type containing exact numbers of "periods" to filter.

Timezones

All relative timeframes use UTC by default. See the timezone section to specify your own timezone.

The following are supported string timeframes:

- this_minute

- last_minute

- this_hour

- last_hour

- today

- yesterday

- this_week

- last_week

- this_month

- last_month

- this_quarter

- last_quarter

- this_year

- last_year

You can also specify exactly how many current/previous periods you wish to include in the query results.

For example, to filter for today and the previous 2 days:

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "timeframe": { "current": { "days": 3 } }
}

Or, to filter by the last 2 months, excluding the current month:

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "timeframe": { "previous": { "months": 2 } }
}

The following periods are supported for complex, relative timeframes:

- minutes

- hours

- days

- weeks

- months

- quarters

- years

Weeks

Our weeks start on a Sunday and finish on a Saturday. In the future, we plan to support specifying on which day of the week you'd like to start your weeks.
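The difference between current and previous periods can be sketched as a date computation. The example below is illustrative only and covers days, weeks (starting on Sunday) and months in UTC. It treats the end of a current window as the end of the current period rather than the current instant, which is an assumption.

```python
from datetime import datetime, timedelta, timezone

def period_start(unit, moment):
    """Truncate a UTC datetime to the start of its day, week (Sunday) or month."""
    day = moment.replace(hour=0, minute=0, second=0, microsecond=0)
    if unit == "days":
        return day
    if unit == "weeks":
        # weekday() is Monday=0 ... Sunday=6; step back to the most recent Sunday.
        return day - timedelta(days=(day.weekday() + 1) % 7)
    if unit == "months":
        return day.replace(day=1)
    raise ValueError(f"unsupported period in this sketch: {unit}")

def shift(unit, start, n):
    """Move a period start by n whole periods (n may be negative)."""
    if unit == "days":
        return start + timedelta(days=n)
    if unit == "weeks":
        return start + timedelta(weeks=n)
    # Months: start is always the 1st, so changing year/month is safe.
    index = start.year * 12 + (start.month - 1) + n
    return start.replace(year=index // 12, month=index % 12 + 1)

def relative_window(timeframe, now):
    """Return (start, end) for {"current": {...}} or {"previous": {...}}; end is exclusive."""
    kind, periods = next(iter(timeframe.items()))
    unit, n = next(iter(periods.items()))
    current = period_start(unit, now)
    if kind == "current":
        # The current (partial) period plus the n - 1 complete periods before it.
        return shift(unit, current, -(n - 1)), shift(unit, current, 1)
    if kind == "previous":
        # n complete periods, excluding the current one.
        return shift(unit, current, -n), current
    raise ValueError(f"unknown relative timeframe: {kind}")

if __name__ == "__main__":
    print(relative_window({"previous": {"months": 2}}, datetime.now(timezone.utc)))
```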

Absolute timeframes

You can specify an absolute timeframe by constructing an object with the start and end properties set to the desired start and end times in ISO-8601 format.

For example:

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "timeframe": { "start": "2015-02-01T00:00:00.000Z", "end": "2015-02-25T00:00:00.000Z" }
}

This will match all events with a timestamp greater than or equal to 2015-02-01 00:00:00 UTC and less than 2015-02-25 00:00:00 UTC.

With an absolute timeframe, you can only specify a timezone if you have also specified a time interval. If a timezone is specified on an absolute timeframe query without a time interval, an error will be returned.

Group by

You can group the query results by one or more properties from your events.

Missing properties vs null values

We treat missing properties and properties with a null value the same for the purpose of grouping. This means that all events with a null value for a property or missing that property altogether will be grouped into the "null" value for that query. While we plan to change this in the future, you should consider setting a default value as opposed to a null value on properties if you wish to make a distinction.

For example, to query total sales by country:

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "groupBy": "country"
}

This would return a result like:

{
  "metadata": {
    "groups": ["country"],
    "interval": null,
    "timezone": null
  },
  "results": [
    { "country": "Australia", "totalPrice": 1000000 },
    { "country": "Italy", "totalPrice": 2500000 },
    { "country": "United States", "totalPrice": 10000000 }
  ]
}

Grouping by multiple properties

You can also group by multiple properties in your events by providing multiple property names.

For example, to query total sales by country and product category:

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "groupBy": ["country", "product.category"]
}

This would return a result like:

{
  "metadata": {
    "groups": ["country", "product.category"],
    "interval": null,
    "timezone": null
  },
  "results": [
    { "country": "Australia", "product.category": "Bikes", "totalPrice": 500000 },
    { "country": "Australia", "product.category": "Cars", "totalPrice": 500000 },
    { "country": "Italy", "product.category": "Scooters", "totalPrice": 2500000 },
    { "country": "United States", "product.category": "Mobile Phones", "totalPrice": 8000000 },
    { "country": "United States", "product.category": "Laptops", "totalPrice": 2000000 }
  ]
}
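The metadata lists the groups in order, so a client can use it to reshape grouped results without hard-coding property names. Below is a sketch with a hypothetical `pivot` helper that nests results by each group value. Null group values simply become `None` keys.

```python
def pivot(response: dict, value_key: str) -> dict:
    """Nest grouped results by each group value, in the order given by metadata.groups."""
    groups = response["metadata"]["groups"]
    if not groups:
        raise ValueError("query has no groupBy")
    table = {}
    for row in response["results"]:
        node = table
        # Walk/create one nested level per group except the last.
        for group in groups[:-1]:
            node = node.setdefault(row[group], {})
        node[row[groups[-1]]] = row[value_key]
    return table
```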

Time intervals

Time intervals allow you to group results by a time period, so that you can analyze your events over time.

For example, to query daily total sales this month:

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "timeframe": "this_month",
  "interval": "daily"
}

This would return a result like:

{
  "metadata": {
    "groups": [],
    "interval": "daily",
    "timezone": null
  },
  "results": [
    {
      "interval": { "start": "2015-02-01T00:00:00Z", "end": "2015-02-02T00:00:00Z" },
      "results": [{ "totalPrice": 500000 }]
    },
    {
      "interval": { "start": "2015-02-02T00:00:00Z", "end": "2015-02-03T00:00:00Z" },
      "results": [{ "totalPrice": 150000 }]
    },
    {
      "interval": { "start": "2015-02-03T00:00:00Z", "end": "2015-02-04T00:00:00Z" },
      "results": [{ "totalPrice": 25000 }]
    }
  ]
}

The following time intervals are supported:

- minutely

- hourly

- daily

- weekly

- monthly

- quarterly

- yearly

Time interval with group by

You can also combine a time interval with a group by in your query.

For example, to query daily total sales this month by country:

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "timeframe": "this_month",
  "interval": "daily",
  "groupBy": "country"
}

This would return a result like:

{
  "metadata": {
    "groups": ["country"],
    "interval": "daily",
    "timezone": null
  },
  "results": [
    {
      "interval": { "start": "2015-02-01T00:00:00Z", "end": "2015-02-02T00:00:00Z" },
      "results": [
        { "country": "Australia", "totalPrice": 100000 },
        { "country": "Italy", "totalPrice": 100000 },
        { "country": "United States", "totalPrice": 300000 }
      ]
    },
    {
      "interval": { "start": "2015-02-02T00:00:00Z", "end": "2015-02-03T00:00:00Z" },
      "results": [
        { "country": "Australia", "totalPrice": 25000 },
        { "country": "Italy", "totalPrice": 25000 },
        { "country": "United States", "totalPrice": 100000 }
      ]
    },
    {
      "interval": { "start": "2015-02-03T00:00:00Z", "end": "2015-02-04T00:00:00Z" },
      "results": [
        { "country": "Australia", "totalPrice": 5000 },
        { "country": "Italy", "totalPrice": 5000 },
        { "country": "United States", "totalPrice": 15000 }
      ]
    }
  ]
}
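For charting or CSV output, it is often convenient to flatten the nested interval results into one row per interval and group. Below is a minimal sketch. `flatten_intervals` is a hypothetical helper that copies each interval's start and end onto its rows.

```python
def flatten_intervals(response: dict) -> list:
    """Flatten interval results into one row per (interval, group) with start/end columns."""
    if response["metadata"].get("interval") is None:
        raise ValueError("query has no time interval")
    rows = []
    for bucket in response["results"]:
        for result in bucket["results"]:
            row = {"start": bucket["interval"]["start"], "end": bucket["interval"]["end"]}
            row.update(result)  # adds group values and aliased aggregations
            rows.append(row)
    return rows
```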

Timezones

By default, Connect uses UTC as the timezone for queries with relative timeframes or time intervals. You can override this to be the timezone of your choice by specifying the timezone in your query.

You can only specify a timezone when you have specified a time interval and/or a relative timeframe. If you try to specify a timezone without one of these set, an error will be returned.

You can specify the timezone as a numeric (decimal) offset from UTC in hours, for example:

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "timeframe": "this_month",
  "timezone": 10
}

You can also specify a string which contains an IANA time zone identifier, for example:

{
  "select": {
    "totalPrice": { "sum": "price" }
  },
  "timeframe": "this_month",
  "timezone": "Australia/Brisbane"
}
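A misplaced timezone results in an error, so a client can check queries before sending them. This hypothetical `check_timezone` helper mirrors the rules stated above. It does not check that an IANA name is valid, since that would depend on the timezone database available locally.

```python
def check_timezone(query: dict) -> None:
    """Raise ValueError if the query sets a timezone the API would reject.

    A timezone needs a time interval and/or a relative timeframe; an absolute
    timeframe on its own is not enough.
    """
    if "timezone" not in query:
        return
    tz = query["timezone"]
    # bool is a subclass of int, so reject it explicitly.
    if isinstance(tz, bool) or not isinstance(tz, (int, float, str)):
        raise ValueError("timezone must be an hour offset or an IANA zone name")
    timeframe = query.get("timeframe")
    is_relative = isinstance(timeframe, str) or (
        isinstance(timeframe, dict) and ("current" in timeframe or "previous" in timeframe)
    )
    if "interval" not in query and not is_relative:
        raise ValueError("timezone requires an interval or a relative timeframe")
```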

Error handling

The following error responses may be returned from the API:

422 Unprocessable Entity

The query was not in the correct format. The message body will contain a list of errors, for example:

{
  "errors": [
    {
      "field": "query",
      "description": "The query JSON supplied is not valid."
    }
  ]
}

413 Too Many Results

Query result sets are limited to 10,000 results. If you exceed this threshold, you will receive this error.

Ideally, queries should return a small number of results, even if they are aggregating a large number of events. You should reconsider the way in which you are querying if you are hitting this limit.

500 Internal Server Error

An internal server error occurred in the Connect API.

Exporting events

Connect allows you to export the raw events that were pushed to a collection.

You can export up to 10,000 events and receive an immediate response, or export up to 1,000,000 events to Amazon S3.

Small exports

GET https://api.getconnect.io/events/:collection/export?query=

| Parameter | Description |
|---|---|
| collection | collection to export |
| query | query to run (query string parameter) |

For exports of up to 10,000 events, you can provide a query and receive an immediate response with the raw events.

The query supports only filters and timeframes, in the same format as the normal query language, for example:

{
  "filter": {
    "product": "apple"
  },
  "timeframe": "today"
}

The following responses may occur:

200 OK

The export was successful. The body of the response contains the raw events, for example:

{
  "results": [
    {
      "id": "9eb91561-1964-468a-b6bf-c16034b6757c",
      "timestamp": "2015-07-28T00:00:30.295Z",
      "name": "test"
    },
    {
      "id": "28c86f2e-4ad8-423a-85ea-b09a5ab9549d",
      "timestamp": "2015-07-28T00:00:52.549Z",
      "name": "test"
    }
  ]
}

422 Unprocessable Entity

The query supplied is not valid.

413 Too Many Results

The query supplied resulted in over 10,000 events, which is not supported. To export these events you can export to Amazon S3 (up to 1,000,000 events).

500 Internal Server Error

An internal server error occurred in the Connect API.

Exporting to Amazon S3

POST https://api.getconnect.io/events/:collection/export

| Parameter | Description |
|---|---|
| collection | collection to export |
| query | query to run |
| s3Path | the S3 path where the export will be uploaded |
| s3AccessKeyId | key to access the S3 bucket |
| s3SecretAccessKey | secret key to access the S3 bucket |

You can export up to 1,000,000 events to Amazon S3. The results of the export will be uploaded to the S3 path you specify, using the credentials specified.

You must POST these options in JSON format to the above URL, for example:

{
  "query": {
    "timeframe": "today"
  },
  "s3Path": "https://my-bucket.s3.amazonaws.com/exports/",
  "s3AccessKeyId": "NOT_TELLING",
  "s3SecretAccessKey": "NOT_TELLING"
}

As with small exports, the query supports only filters and timeframes, in the same format as the normal query language.

A unique ID is generated for every export. This ID is used to monitor the export and as the filename for the export (unique_id.json). The exported file will contain one line per event.
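Below is a minimal sketch of building the S3 export request body, which rejects query keys other than filter and timeframe before the API does. `build_s3_export_body` is hypothetical. In practice, load the S3 credentials from your environment or a secret store rather than hard-coding them.

```python
import json

ALLOWED_EXPORT_KEYS = {"filter", "timeframe"}

def build_s3_export_body(query: dict, s3_path: str, access_key_id: str,
                         secret_access_key: str) -> str:
    """Build the JSON body for POST /events/:collection/export."""
    unsupported = set(query) - ALLOWED_EXPORT_KEYS
    if unsupported:
        raise ValueError(f"export queries only support filter and timeframe, got: {sorted(unsupported)}")
    return json.dumps({
        "query": query,
        "s3Path": s3_path,
        "s3AccessKeyId": access_key_id,
        "s3SecretAccessKey": secret_access_key,
    })
```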

The following responses may occur:

201 Created

The export was successfully created. The Location header will contain the URL with which you can monitor the export.

422 Unprocessable Entity

The query supplied is not valid.

500 Internal Server Error

An internal server error occurred in the Connect API.

Monitoring the export

GET https://api.getconnect.io/events/:collection/export/:id

| Parameter | Description |
|---|---|
| collection | collection being exported |
| id | unique ID of the export (from the Location header of a successful export) |

Once you have successfully created an export, the Location header will provide a URL to monitor the export.

This allows you to check the status and progress of an export, as well as any errors that may occur during the export process.

For example:

{
  "filename": "55b80dd4eb6742481c62c05e.json",
  "collection": "testing",
  "status": "Pending",
  "created": "2015-07-28T23:18:44.698Z",
  "started": null,
  "finished": null,
  "error": null,
  "percentDone": 0,
  "transferredBytes": 0,
  "totalBytes": 0
}

| Property | Description |
|---|---|
| filename | the filename being uploaded to Amazon S3 (to the path specified in the export request) |
| collection | the collection being exported |
| status | the status of the export |
| created | the date the export was created (in UTC) |
| started | the date the export started (in UTC, null if the status is Pending) |
| finished | the date the export finished (in UTC, null if the status is Pending or Running) |
| error | an error message indicating the reason for failure, if the status is Failed |
| percentDone | a number (0 to 100) indicating the progress of the export |
| transferredBytes | the number of bytes transferred to Amazon S3 so far |
| totalBytes | the total number of bytes to transfer to Amazon S3 (i.e. the size of the export) |

An export can be in one of the following statuses:

- Pending - the export is yet to start

- Running - the export is currently running

- Complete - the export completed successfully

- Failed - the export failed (the error will contain the reason)

Deleting collections

DELETE https://api.getconnect.io/events/:collection

| Parameter | Description |
|---|---|
| collection | collection to delete |

You can delete entire collections by sending a DELETE request to the collection resource URL.

This is an irreversible action. Once a collection is deleted, all events that were pushed to that collection are also deleted. You should use this operation carefully.

Projects and keys

Connect allows you to manage multiple projects under a single account so that you can easily segregate your collections into logical projects.

You could use this to separate analytics for entire projects, or to implement separation between different environments (e.g. My Project (Prod) and My Project (Dev)).

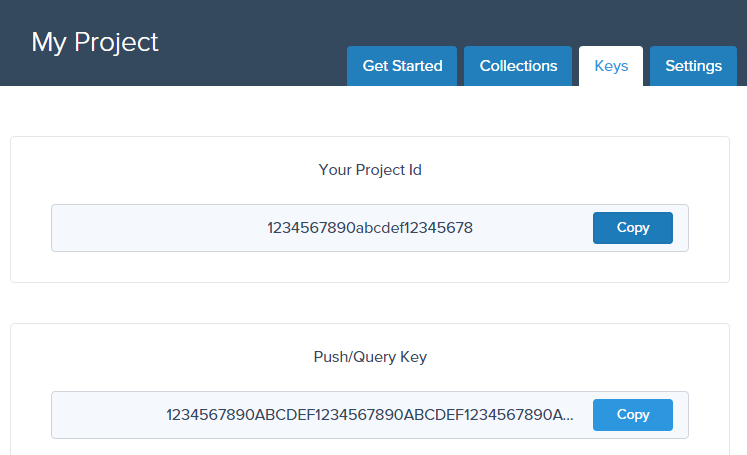

To start pushing and querying your event data, you will need both a project ID and an API key. This information is available to you via the admin console inside each project under the "Keys" tab:

By default, you can choose from four different types of keys, each with their own specific use:

- Push/Query Key - you can use this key to both push events and execute queries. You should only use this key in situations where you cannot separate pushing from querying.

- Push Key - you can only use this key to push events. You should use this key in your apps where you are tracking event data but do not require querying.

- Query Key - you can only use this key to execute queries. You should use this key in your reporting interfaces where you do not wish to track events.

- Project Master Key - you can use this key to execute all types of operations on a project, including pushing, querying and deleting collections. Keep this key safe; it is intended for very limited use and should definitely not be included in your main apps.

You must use your project ID and desired key to begin using Connect:

curl -X POST \
  -H "X-Project-Id: YOUR_PROJECT_ID" \
  -H "X-API-Key: YOUR_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{ "customer": { "firstName": "Tom", "lastName": "Smith" }, "product": "12 red roses", "purchasePrice": 34.95 }' \
  https://api.getconnect.io/events/test-collection

Security

Security is a vital component of the Connect service and we take it very seriously. It is important to consider how to keep your data secure.

API Keys

API keys are the core security mechanism by which you push and query your data. It is important to keep these keys safe by controlling where they exist and who has access to them.

Each key can push, query or both. The most important key is the Project Master Key, which can perform all of these actions, as well as administrative functions such as deleting data. Read more about the keys in the Projects and keys section.

Keeping API Keys Secure

You should carefully consider when and which API keys to expose to users.

Crucially, you should never expose your Project Master Key to users or embed it in client applications.

If this key does get compromised, you can reset it.

If you embed API keys in client applications, you should consider these keys as fully accessible to anyone having access to that client application. This includes both mobile and web applications.

Pushing events securely

While you can use a Push Key to prevent clients from querying events, you cannot restrict the collections or events clients can push to the API. Unfortunately, this is the nature of tracking events directly client-side, and it opens the door to malicious users potentially sending bad data.

In many circumstances, this is not an issue as users can already generate bad data simply by using your application in an incorrect way, generating events with bad or invalid data. In circumstances where you absolutely cannot withstand bad event data, you should consider pushing the events server-side from a service under your control.

Finally, if a Push Key is compromised or being used maliciously, you can always reset it by resetting the master key.

Querying events securely

To query events, you must use an API key that has query permissions. By default, a Query Key has full access to all events in all collections in your project. If this key is exposed, a client could execute any type of query on your collections.

You have a number of options for querying events securely:

- For internal querying or dashboards, you may consider it acceptable to expose the normal Query Key in client applications. Keep in mind that this key can execute any query on any collection in the project.

- Generate a filtered key, which applies a specific set of filters to all queries executed by clients using the key.

- Only allow clients to execute queries via a service you control, which in turn executes queries via the Connect API server-side.
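For the last option, the key step in your service is forcing your own filters onto whatever query the client sends before forwarding it to Connect with a Query Key held server-side. A minimal sketch of that scoping step, assuming the query's filter is a flat object of property conditions that must all hold (as in the "filter" examples later in this guide); scope_query is hypothetical and the forwarding call is omitted.

```python
import copy


def scope_query(client_query: dict, enforced_filter: dict) -> dict:
    """Return a copy of client_query whose filter always includes enforced_filter.

    Hypothetical helper: values in enforced_filter override anything the
    client supplied for the same property, so a client cannot widen its
    access by filtering on another customer's ID.
    """
    scoped = copy.deepcopy(client_query)  # never mutate the caller's query
    merged = dict(scoped.get("filter") or {})
    merged.update(enforced_filter)  # server-side values win
    scoped["filter"] = merged
    return scoped
```

For example, scope_query(query_from_client, {"customerId": 1234}) returns a query that can only ever see that customer's events, whatever the client asked for.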

Finally, if a Query Key is compromised or being used maliciously, you can always reset it by resetting the master key.

Resetting the master key

Resetting the Project Master Key will invalidate the previous key and generate a new, random key. This action will also reset all other keys for the project (including the push and query keys and any filtered keys generated).

Doing this is irreversible and will prevent all applications using existing keys from pushing to or querying the project.

You can only reset the master key in the projects section of the admin console.

Filtered keys

Filtered keys allow you to create an API key that can push or query and, in the case of querying, apply one or more filters to all queries executed with the key.

This allows you to have finer control over security and what data clients can access, especially in multi-tenant environments.

Filters are only applied to queries

Any filters specified in your filtered key only apply to querying. We currently do not support applying filters to restrict the pushing of events.

Filtered keys can only push or query (as you specify); they can never perform administrative functions such as deleting data.

Generating a filtered key

Filtered keys are generated and encrypted with the Project Master Key. You do not have to register the filtered key with the Connect service.

It is important that you never generate filtered keys client-side; always ensure they are generated by a secure, server-side service.

Create a JSON object describing the access allowed by the key, including any filters, for example:

{ "filters": { "customerId": 1234 }, "canQuery": true, "canPush": false }

The object supports the following properties:

- filters (object) - The filters to apply to all queries executed using the key. This uses the same specification as defining filters when querying normally.

- canQuery (boolean) - Whether or not the key can be used to execute queries. If false, the filters property is ignored (as it does not apply to pushing).

- canPush (boolean) - Whether or not the key can be used to push events.

Serialize the JSON object to a string.

Generate a random 128-bit initialization vector (IV).

Encrypt the JSON string using AES256-CBC with PKCS7 padding (128-bit block size), using the random IV generated in the previous step and the Project Master Key as the encryption key.

Convert the IV and cipher text to hex strings and combine them (IV first, followed by the cipher text), separated by a hyphen (-). The resulting key should look something like this:

5D07A77D87D5B20FA5508303F748A43B-DDFA284A9341068A704A846E83ACF49069D960632C4F74A44B5EE330073F79A8324ADC91023F88F63AAE4507F3D119B5C7F31A2D7D9616408E9665EC6C1DEBE3

You can now use this API key to either push events or execute queries, depending on the canPush and canQuery properties, respectively.
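The generation steps can be sketched in Python with the third-party cryptography package. This is a sketch under stated assumptions, not a reference implementation: it assumes the Project Master Key string, encoded as UTF-8, is used directly as the AES key and is exactly 32 bytes (256 bits), and it serializes the JSON compactly. Verify both assumptions against your own master key before relying on it. generate_filtered_key is a hypothetical name, and it should only ever run in a secure server-side service.

```python
import json
import os

from cryptography.hazmat.primitives import padding
from cryptography.hazmat.primitives.ciphers import Cipher, algorithms, modes


def generate_filtered_key(master_key: str, access: dict) -> str:
    """Encrypt an access definition into a filtered key of the form IV-CIPHERTEXT (hex)."""
    # Assumption: the UTF-8 master key string is the raw 256-bit AES key.
    key = master_key.encode("utf-8")
    if len(key) != 32:
        raise ValueError("expected a 32-byte master key for AES-256")

    # Serialize the access definition (filters, canQuery, canPush) to a string.
    # Compact separators; whitespace does not change the JSON's meaning.
    plaintext = json.dumps(access, separators=(",", ":")).encode("utf-8")

    # Random 128-bit initialization vector.
    iv = os.urandom(16)

    # PKCS7 padding to the 128-bit AES block size, then AES-256-CBC.
    padder = padding.PKCS7(128).padder()
    padded = padder.update(plaintext) + padder.finalize()
    encryptor = Cipher(algorithms.AES(key), modes.CBC(iv)).encryptor()
    ciphertext = encryptor.update(padded) + encryptor.finalize()

    # Hex-encode IV and cipher text, IV first, joined with a hyphen.
    return f"{iv.hex().upper()}-{ciphertext.hex().upper()}"
```

Calling generate_filtered_key(master_key, {"filters": {"customerId": 1234}, "canQuery": True, "canPush": False}) returns a new key on every call, because the IV is random each time.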

Finally, if a filtered key is compromised or being used maliciously, you can always reset it by resetting the master key.

Modeling your events

When using Connect to analyze and visualize your data, it is important to understand how best to model your events. The way you structure your events will directly affect your ability to answer questions with your data. It is therefore important to consider up-front the kind of questions you anticipate answering.

What is an event?

An event is an action that occurs at a specific point in time. To answer "why did this event occur?", our event needs to contain rich details about what the "world" looked like at that point in time.

Put simply, events = action + time + state.

For example, imagine you are writing an exercise activity tracker app, and you want to give its users the ability to analyze their performance over time. This is an event produced by our hypothetical activity tracker app:

{
  "type": "cycling",
  "timestamp": "2015-06-23T16:31:56.587Z",
  "duration": 67,
  "distance": 21255,
  "caloriesBurned": 455,
  "maxHeartRate": 182,
  "user": {
    "id": "638396",
    "firstName": "Bruce",
    "lastName": "Jones",
    "age": 35
  }
}

Action

What happened? In the above example, the action is that an activity was completed.

In most circumstances, we group all events of the same action into a single collection.

In this case, we could call our collection activityCompleted, or alternatively, just activity.

Time

When did it happen? In the above example, we specified the start time of the activity as the value of the timestamp property. The top-level timestamp property is a special property in Connect. This is because time is an essential property of event data - it's not optional.

When an event is pushed to Connect, the current time is assigned to the timestamp property if no value was provided by you.
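If you push events some time after they happened (for example, from a queue or a batch job), you will usually want to set timestamp yourself rather than rely on the push time. A minimal sketch that formats a timezone-aware datetime in the same ISO 8601 UTC form as the example event (millisecond precision with a trailing Z); the helper name is hypothetical, and it assumes Connect accepts this format, as the example event suggests.

```python
from datetime import datetime, timezone


def to_connect_timestamp(moment: datetime) -> str:
    """Format an aware datetime like 2015-06-23T16:31:56.587Z (UTC, milliseconds)."""
    if moment.tzinfo is None:
        raise ValueError("use a timezone-aware datetime to avoid ambiguous local times")
    utc = moment.astimezone(timezone.utc)
    # isoformat renders UTC as "+00:00"; swap it for the "Z" suffix used in the example.
    return utc.isoformat(timespec="milliseconds").replace("+00:00", "Z")
```

Converting to UTC first means events recorded in different time zones still sort and group consistently.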

State

What do we know about this action? What do we know about the entities associated with this action? What do we know about the "world" at this moment in time? Every property in our event, besides the timestamp and the name of the collection, serves to answer those questions. This is the most important aspect of our event - it's where all the answers live.

The richer the data you provide in your event, the more questions you can answer for your users, so it's important to enrich your events with as much information as possible. In stark contrast to the relational model, where you would store this related information in separate tables and join them at query time, the event model denormalizes this data into each event, so that each event records the state of the "world" at the point in time it occurred.

Collections

It is important when modeling your events to consider how you intend to group those events into collections. This is a careful balance between collections being broad enough to answer queries for your users and specific enough to be manageable.

In our activity example, the activity contains different properties based on the type of activity. Our cycling activity might contain properties associated with the bike that was used, while a kayaking activity may contain properties associated with the kayak that was used.

Because a kayaking event may have different properties from a running event, it might seem logical to put each of them in distinct collections. However, if we had distinct cycling, running and kayaking collections, we would lose the opportunity to query details that are common to all activities.

As a general rule, consider the common action among your events and decide if the specific variants of that action warrant grouping those events together.

Structuring your events

Events have the following core properties:

- Denormalized

- Immutable

- Rich/nested

- Schemaless

It is also important to consider how to group events into collections to enable future queries to be answered.

Events are denormalized

Consider our example event again, and notice the age property of the user:

{
  "type": "cycling",
  ...
  "user": {
    "id": "638396",
    "firstName": "Bruce",
    "lastName": "Jones",
    "age": 35
  }
}

The user's age is going to be duplicated in every activity he or she completes throughout the year. This may seem inefficient; however, remember that Connect is built for analysis. This denormalization is a real win for analysis: event data stores state over time, rather than merely the current state. This helps us answer questions about why something happened, because we know what the "world" looked like at that point in time.

For example, imagine we wanted to chart the average distance cycled per ride, grouped by the age of the rider at the time of the ride. We could simply execute the following query:

{
  "select": { "averageDistance": { "avg": "distance" } },
  "groupBy": "user.age"
}

It's this persistence of state over time that makes event data perfect for analysis.

Events are immutable

By their very nature, events cannot change, as they always record state at the point in time of the event. This is also why you should record as much rich information about the event and the "state of the world" as possible.

For example, while Bruce Jones from our example event may now be many years older, he was 35 years of age when he completed his bike ride. By ensuring this event remains immutable, we can correctly analyze bike riding over time by 35-year-olds.
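In application code, this means building each event from a copy of the current state rather than a reference to your live objects, so later changes (such as Bruce's next birthday) never leak into events you have already recorded. A minimal sketch; build_activity_event and the shape of the user record are hypothetical, modeled on the example event.

```python
import copy


def build_activity_event(activity_type: str, duration: int, distance: int,
                         user: dict) -> dict:
    """Return a self-contained activity event with the user's state denormalized into it."""
    return {
        "type": activity_type,
        # No timestamp: Connect assigns the push time when none is provided.
        "duration": duration,
        "distance": distance,
        # Deep copy, so the event keeps the user's state as it was at this moment.
        "user": copy.deepcopy(user),
    }
```

Updating the live user record afterwards (for example, setting age to 36) leaves the already-built event at 35.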

Consider events as recording history - as much as we'd occasionally like to, we can't change history!

Events are rich and nested

Events are rich in that they specify very detailed state. They specify details about the event itself, the entities involved and the state of the "world" at that point in time.

Consider our example activity event: the top-level type property describes something about the activity itself (a run, a bike ride, a kayak, etc.). The user property specifies rich information about the actor who performed the event; in this case, the person who completed the activity, complete with their name and age.

In reality, though, we may decide to include a few other nested entities in our event, for example:

{
  "type": "cycling",
  ...
  "user": {
    "id": "638396",
    "firstName": "Bruce",
    ...
  },
  "bike": {
    "id": "231806",
    "brand": "Specialized",
    "model": "S-Works Venge"
  },
  "weather": {
    "condition": "Raining",
    "temperature": 21,
    "humidity": 99,
    "wind": 17
  }
}

Note our event now includes details about the bike used and the weather conditions at the time of the activity. By adding this extra bike state information to our event, we have opened up extra possibilities for interrogating our data. For example, we can now query the average distance cycled by each model of bike that was built by "Specialized":

{
  "select": { "averageDistance": { "avg": "distance" } },
  "groupBy": "bike.model",
  "filter": { "bike.brand": "Specialized" }
}

The weather also provides us with exciting insights - what did the world look like at this point in time? What was the weather like? Storing this data allows us to answer yet more questions. We can test our hypothesis that "older people are less scared of riding in the rain" by simply charting the following query:

{
  "select": { "averageDistance": { "avg": "distance" } },
  "groupBy": ["user.age", "weather.condition"]
}

As you can see, the richer and more denormalized the event, the more interesting answers can be derived when later querying.
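To make the semantics of these queries concrete, the sketch below evaluates the same shape of query (a single avg aggregation, an optional groupBy of one or more dotted property paths, and an optional equality filter) over in-memory events. It only illustrates what the results mean under those simplifying assumptions; it is not how Connect executes queries and does not cover the full query language. get_path and run_local_query are hypothetical names.

```python
from collections import defaultdict
from statistics import mean
from typing import Any


def get_path(event: dict, path: str) -> Any:
    """Resolve a dotted path such as "user.age" against a nested event (None if missing)."""
    value: Any = event
    for part in path.split("."):
        if not isinstance(value, dict) or part not in value:
            return None
        value = value[part]
    return value


def run_local_query(events: list, query: dict) -> dict:
    """Evaluate a simplified query of the form
    {"select": {alias: {"avg": field}}, "groupBy": path or [paths], "filter": {path: value}}.
    """
    # Exactly one aggregation, and only "avg", is supported in this sketch.
    [(alias, aggregation)] = query["select"].items()
    field = aggregation["avg"]
    group_by = query.get("groupBy", [])
    paths = [group_by] if isinstance(group_by, str) else list(group_by)
    conditions = query.get("filter", {})

    groups = defaultdict(list)
    for event in events:
        # Equality filters only, e.g. {"bike.brand": "Specialized"}.
        if not all(get_path(event, p) == v for p, v in conditions.items()):
            continue
        value = get_path(event, field)
        if value is None:
            continue  # skip events that lack the averaged property
        key = tuple(get_path(event, p) for p in paths)
        groups[key].append(value)

    # One result per group, keyed by the tuple of group values, e.g. (35, "Raining").
    return {key: {alias: mean(values)} for key, values in groups.items()}
```

Because each event carries the rider's age and the weather as they were at the time, grouping by "user.age" and "weather.condition" needs no joins: every value the query needs is already inside the event.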

Events are schemaless

Events in Connect should be considered semi-structured: they have an inherent structure, but no schema is defined or enforced. This means you can, and should, push as much detailed information about an event and the state of the "world" as possible. It also allows you to evolve the structure of your events over time, adding extra information to new events as that information becomes available.

Restrictions

While you can post almost any event structure to Connect, there are a few restrictions by design.

Property names

You cannot have any property in the root document beginning with "tp_". This is because we prefix our own internal properties with this. Internally, we merge our properties into your events for performance at query time.

The property "_id" is reserved and cannot be pushed.

The properties "id" and "timestamp" have special purposes. These allow consumers to specify a unique ID per event and override the event's timestamp, respectively. You cannot use the "id" property in queries. Refer to "reliability of events" and "timestamps" for more information.

The length of property names can't exceed 255 characters. If you need property names longer than this, you probably need to reconsider the structure of your event!

Properties cannot include a dot in their names. This is because dots are used in querying to access nested properties. The following is an example of an invalid event property due to a dot in the name:

{
  "invalid.property": "value"
}
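Since an event that breaks these rules is rejected with an error response (such as the 400 shown for reserved tp_ names under Responses), it can be worth checking property names before pushing. A minimal client-side sketch of the rules above; find_property_name_errors is hypothetical, and it assumes the length and dot rules apply at every nesting level while the tp_ and _id rules apply to the root document only.

```python
MAX_NAME_LENGTH = 255


def find_property_name_errors(event: dict) -> list:
    """Return human-readable problems with an event's property names (empty if none)."""
    errors = []

    # Root-only rules: the tp_ prefix and _id are reserved.
    for name in event:
        if name.startswith("tp_"):
            errors.append(f"{name}: root properties cannot start with 'tp_'")
        if name == "_id":
            errors.append("_id: this property is reserved")

    # Rules assumed to apply at every nesting level: length and dots.
    def walk(obj: dict, prefix: str) -> None:
        for name, value in obj.items():
            where = f"{prefix}{name}"
            if len(name) > MAX_NAME_LENGTH:
                errors.append(f"{where}: name longer than {MAX_NAME_LENGTH} characters")
            if "." in name:
                errors.append(f"{where}: names cannot contain '.'")
            if isinstance(value, dict):
                walk(value, f"{where}/")  # "/" separator, since dots are what we reject

    walk(event, "")
    return errors
```

For the invalid event shown above, the function reports a single error for invalid.property; the example activity events pass with an empty list.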

Arrays

While you can create events with arrays, it is currently not possible to take advantage of these arrays at query time. Therefore, you should avoid using arrays in your events unless you plan to export the raw events.

Distinct count

Distinct count is currently not supported for querying, so you should consider how to structure your events if your application relies on it.